Introduction

In this article, we look at Kubernetes pods and how we can restart them using the kubectl CLI tool.

Before we dive into the methods of restarting pods, let's quickly go over some basics. A pod is a group of one or more containers that work together and share network and storage resources within Kubernetes.

Sometimes, these pods encounter issues and need to be restarted to keep our applications running smoothly.

Topics we will cover in this article:

- What is the lifecycle of a Pod in Kubernetes?

- Methods to Restart Pods using kubectl

- Best Practices when dealing with Pod restarts

What is the lifecycle of a Pod in Kubernetes?

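The lifecycle of a Pod in Kubernetes begins when it is created. The Pod starts in the Pending phase, moves to Running once at least one of its containers has started, and ends in Succeeded or Failed depending on how its containers exit. The Unknown phase is reported when the state of the Pod cannot be determined, typically because the node it runs on cannot be reached.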

Throughout this lifecycle, Pods are managed automatically by the control plane, ensuring they run as intended.
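
To see which phase a Pod is currently in, we can read it from the Pod's status. The following is a minimal sketch: the helper name pod_phase is our own and not part of kubectl, and it assumes the Pod lives in the current namespace.

```shell
# Print the lifecycle phase of a Pod (Pending, Running, Succeeded, Failed, or Unknown).
# pod_phase is a hypothetical helper name; the Pod name is passed as the first argument.
pod_phase() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}'
}
```

For example, pod_phase deploy-pod prints the phase of a Pod named deploy-pod.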

Pod Restart Policies

In Kubernetes, a Pod's restartPolicy controls when the kubelet restarts the containers of that Pod:

- Always: Containers are restarted whenever they exit, regardless of their exit status. This is the default policy.

- OnFailure: Containers are restarted only if they exit with a non-zero status, indicating an error or failure.

- Never: As the name suggests, containers are never restarted, regardless of their exit status.

These policies give us control over how the containers of an application are handled when they stop.

Here's a simple example of how we define a pod's restart policy:

apiVersion: v1
kind: Pod
metadata:
  name: deploy-pod
spec:
  restartPolicy: OnFailure
  containers:
    - name: cicube-v2
      image: nginx:latest

Here we define a Kubernetes pod and set its restart policy to OnFailure, meaning its container will only be restarted if it exits with an error.
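
Once the Pod is created, we can check which policy is in effect and how often each container has been restarted. This is only a sketch: pod_restart_info is a hypothetical helper name, and it assumes the Pod exists in the current namespace.

```shell
# Print a Pod's restart policy, followed by one line per container
# with the container name and its restart count.
# pod_restart_info is a hypothetical helper name.
pod_restart_info() {
  kubectl get pod "$1" -o jsonpath='{.spec.restartPolicy}{"\n"}{range .status.containerStatuses[*]}{.name}{"\t"}{.restartCount}{"\n"}{end}'
}
```

Calling pod_restart_info deploy-pod on the Pod defined above should report OnFailure on the first line.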

Methods to Restart Pods using kubectl

Now, let's look at how we can restart pods using the kubectl CLI tool. We'll walk through each method with some command examples:

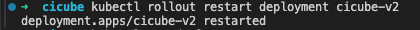

Using the kubectl rollout restart command

If you prefer a rolling restart approach, you can use the rollout restart command.

Let's say we have a Deployment named "cicube-v2".

kubectl get pods

NAME                         READY   STATUS      RESTARTS      AGE
cicube-v2-78f7dcfb49-nncf6   1/1     Running     1 (46h ago)   2d
nginx                        0/1     Completed   0             2d1h

# Restart pods managed by a Deployment named cicube-v2

kubectl rollout restart deployment cicube-v2

This command starts a gradual restart of the pods, which helps to keep your application available with as little interruption as possible.

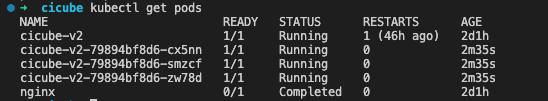

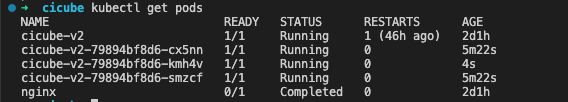

Afterwards, if we check the pods again, we'll see that a new pod is running while the old one is terminating.

NAME                         READY   STATUS        RESTARTS      AGE
cicube-v2-78f7dcfb49-nncf6   0/1     Terminating   1 (46h ago)
cicube-v2-79894bf8d6-ghhfl   1/1     Running       0             11s
nginx                        0/1     Completed     0             2d1h
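
Instead of checking the pods by hand, we can let kubectl wait until the rollout has finished. The sketch below combines both steps. restart_and_wait is a hypothetical helper name, and the 120s default timeout is an arbitrary choice, not a recommended value.

```shell
# Trigger a rolling restart and block until it completes or the timeout expires.
# restart_and_wait is a hypothetical helper; the second argument (timeout) is optional.
restart_and_wait() {
  deployment="$1"
  timeout="${2:-120s}"
  kubectl rollout restart deployment "$deployment" || return 1
  # rollout status exits with a non-zero status if the rollout does not finish in time
  kubectl rollout status deployment "$deployment" --timeout="$timeout"
}
```

For example, restart_and_wait cicube-v2 restarts the Deployment from above and returns once all new pods are ready.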

Using kubectl scale command

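Scaling a Deployment down to 0 replicas terminates all of its pods, and scaling it back up makes Kubernetes create new ones, effectively restarting them. Unlike a rolling restart, this leaves the application unavailable until the new pods are ready.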

# Scale down the number of replicas to 0

kubectl scale deployment cicube-v2 --replicas=0

# Output: deployment.apps/cicube-v2 scaled

# Scale the number of replicas back up to restart the pods

kubectl scale deployment cicube-v2 --replicas=3

# Output: deployment.apps/cicube-v2 scaled
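
To avoid hard-coding the replica count, we can read the current value first and restore it afterwards. This is a rough sketch under a few assumptions: bounce_deployment is a hypothetical helper name, the Deployment is not managed by an autoscaler, and the sketch does not wait for the old pods to finish terminating before scaling back up.

```shell
# Scale a Deployment to 0 and back to its previous replica count.
# bounce_deployment is a hypothetical helper name.
bounce_deployment() {
  name="$1"
  # Remember the desired replica count before scaling down
  replicas="$(kubectl get deployment "$name" -o jsonpath='{.spec.replicas}')" || return 1
  kubectl scale deployment "$name" --replicas=0 || return 1
  kubectl scale deployment "$name" --replicas="$replicas"
}
```

For example, bounce_deployment cicube-v2 restarts all pods of the Deployment and ends with the same number of replicas it started with.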

Using the kubectl delete command

Deleting and recreating pods is another straightforward method to trigger a restart. Here's how you can do it:

# Delete the pod named cicube-v2-79894bf8d6-zw78d

kubectl delete pod cicube-v2-79894bf8d6-zw78d

After the pod is removed, the ReplicaSet behind the Deployment automatically creates a new pod to replace it, essentially restarting it. A standalone pod that is not managed by a controller is not recreated.
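
Pod names change with every restart, so it is often easier to select pods by label. The sketch below assumes the pods carry the label app=cicube-v2, which depends on how the Deployment was created. delete_pods_by_label is a hypothetical helper name.

```shell
# Delete every pod matching a label selector, then list the pods that replace them.
# delete_pods_by_label is a hypothetical helper; the selector is passed as the first argument.
delete_pods_by_label() {
  kubectl delete pod -l "$1" || return 1
  kubectl get pods -l "$1"
}
```

For example, delete_pods_by_label app=cicube-v2 deletes all matching pods at once, so the application may be briefly unavailable while the replacements start.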

Using the kubectl set env command

To restart pods by modifying environment variables, you can use the set env command. Let's take a look:

# Set environment variable DATE to trigger pod restart

kubectl set env deployment cicube-v2 DATE="$(date)"

This command updates the DATE environment variable in the Deployment's pod template. Any change to the pod template triggers a rolling update, so the pods are replaced automatically.
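
A timestamp without spaces is easier to pass around safely. The following sketch wraps the command in a small helper. touch_deployment_env is a hypothetical name, and the ISO 8601 format is our choice rather than a requirement.

```shell
# Force a rollout by writing a fresh timestamp into the pod template's environment.
# touch_deployment_env is a hypothetical helper; DATE is the variable name used above.
touch_deployment_env() {
  # UTC timestamp such as 2024-01-31T12:00:00Z, which contains no spaces
  stamp="$(date -u +%Y-%m-%dT%H:%M:%SZ)"
  kubectl set env deployment "$1" DATE="$stamp"
}
```

The variable can be removed again with kubectl set env deployment cicube-v2 DATE- (note the trailing dash), which changes the pod template once more and therefore triggers another rollout.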

Best Practices when dealing with Pod restarts

Here are some best practices and considerations for restarting pods in Kubernetes, which may come in handy for newcomers.

Ensuring minimal downtime during pod restarts

When restarting pods, it's crucial to keep any downtime to a minimum to ensure your application stays available. Here are some tips to help:

- Use rolling restarts: Instead of restarting all pods at once, opt for rolling restarts. This way, old pods are gradually replaced with new ones, ensuring your application keeps running smoothly.

- Leverage deployment strategies: Consider using deployment strategies like blue-green deployments or canary releases. A blue-green deployment switches traffic to the new pods only once they are ready, while a canary release shifts traffic to them gradually, which helps avoid downtime.
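
How many pods may be unavailable during a rolling restart is controlled by the Deployment's update strategy. As a sketch, the helper below patches a Deployment so that no existing pod is removed before its replacement is ready. set_zero_unavailable is a hypothetical name, and the values 0 and 1 are one possible choice.

```shell
# Patch a Deployment so a rolling update never drops below the desired replica count:
# maxUnavailable=0 keeps all old pods until new ones are ready,
# maxSurge=1 allows one extra pod to be created at a time.
# set_zero_unavailable is a hypothetical helper name.
set_zero_unavailable() {
  kubectl patch deployment "$1" --patch \
    '{"spec":{"strategy":{"type":"RollingUpdate","rollingUpdate":{"maxUnavailable":0,"maxSurge":1}}}}'
}
```

For example, set_zero_unavailable cicube-v2 applies the setting to the Deployment used in this article. The cluster needs spare capacity for the additional pod during the rollout.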

Monitoring pod health and logging during restart processes

Monitoring pod health and logging relevant information during restarts is essential for identifying and resolving any issues. Here's what you can do:

- Implement health checks: Set up readiness and liveness probes to monitor pod health. Readiness probes check whether a container is ready to serve traffic, while liveness probes detect a container that has stopped working so that the kubelet can restart it.

- Monitor resource usage: Keep an eye on resource metrics like CPU and memory usage. This helps detect any anomalies that could affect pod performance during restarts.

- Utilize logging and monitoring tools: Use a logging stack such as Elasticsearch, Fluentd, and Kibana (EFK stack) to collect and analyze logs, and Prometheus with Grafana to track metrics. Together they provide insights into the restart process and help troubleshoot issues.
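
For a quick look at resource usage without a full monitoring stack, kubectl can report current CPU and memory consumption. This sketch assumes the metrics-server add-on is installed in the cluster and that the pods carry the label passed in. pod_usage is a hypothetical helper name.

```shell
# Show current CPU and memory usage per container for pods matching a label selector.
# pod_usage is a hypothetical helper; it only works if metrics-server is running.
pod_usage() {
  kubectl top pod -l "$1" --containers
}
```

For example, pod_usage app=cicube-v2 can be run before and after a restart to compare the figures.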

Troubleshooting common issues and errors encountered during pod restarts

Despite careful planning, you might encounter common issues during pod restarts. Here are some tips for troubleshooting:

- Check pod status: Use the kubectl get pods command to check pod status. Look for any pods stuck in a pending or terminating state.

- Review pod logs: Retrieve pod logs with kubectl logs to investigate errors or anomalies during restarts.

- Inspect events: Use kubectl describe to inspect events related to pod restarts and identify any underlying issues reported by Kubernetes.
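
The three checks above can be bundled into one helper when a pod keeps restarting. This is only a sketch: diagnose_pod is a hypothetical name, and the limit of 50 log lines is arbitrary.

```shell
# Collect status, events, and logs for a single pod.
# diagnose_pod is a hypothetical helper; the pod name is passed as the first argument.
diagnose_pod() {
  pod="$1"
  # Current status, including the node the pod runs on
  kubectl get pod "$pod" -o wide
  # Detailed state and the recent events recorded for the pod
  kubectl describe pod "$pod"
  # Logs of the previous container instance; this fails if the container never restarted
  kubectl logs "$pod" --previous --tail=50
  # Events for this pod only, oldest first
  kubectl get events --field-selector involvedObject.name="$pod" --sort-by=.lastTimestamp
}
```

For example, diagnose_pod cicube-v2-79894bf8d6-ghhfl prints everything for that pod in one go.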

We hope you find these tips helpful!